SC'20 Recap

The HPC industry's biggest conference, SC, was held virtually over the last two weeks. Although the original plan to hold it in Atlanta was supplanted by an all-virtual format, it still managed to be a whirlwind show full of product showcases, research presentations, and interesting talks, panels, and workshops. The virtual format certainly wasn't the same as attending in-person, but some of the conference buzz and tone could still be sensed by following the #SC20 tag on Twitter.

As with SC'19, the conference seemed subdued in part due to the fact that many attendees were still being pulled away by their daily lives while attending and in part because the HPC community is still waiting for exascale to finally get here. The community's conversion to remote work has also smeared a lot of the usual vendor briefings and big announcements out over the entire five-month period since ISC'20, causing most of the hot news at SC this year to seem incremental over years past.

Still, I picked up on a few themes that I thought were noteworthy, and what follows is a recap of some of the highlights from the conference as I saw them.

All the standard disclaimers apply to the remainder of this post: these are just my personal opinions and do not represent the viewpoint of anyone other than me. I'm not an expert on many (most?) of these topics, so my observations may be misinformed or downright wrong--feel free to get in touch if I stand to be corrected. Also bear in mind that what I find interesting is colored by my day job as a storage architect; I don't pay close attention to the scientific or application spaces in HPC and instead focus on hardware, architecture, systems design, integration, and I/O. As such, I'm sure I missed all sorts of topics that others find exciting.

Table of Contents

- Big Splashes

- High-level Themes

- Actual Future Directions

- Spectrum Scale User Group vs. Lustre BOF

- IO-500 BOF

- Concluding Thoughts

Big Splashes

Although there weren't any earth-shattering announcements this year, there were a few newsworthy developments that received a healthy amount of press attention.

What's new

RIKEN's Fugaku machine made its debut at ISC'20 in June this year, but I felt a lot of its deserved fanfare was muted by the newness of the pandemic and the late-binding decision to convert ISC'20 to being all remote. SC'20 was when Fugaku got to really shine; it improved benchmark results for HPL, HPCG, and Graph500 relative to its ISC'20 numbers:

But RIKEN and Fujitsu had a number of early science success stories to showcase around how the machine was being cited in scientific studies towards better understanding COVID-19.

Intel announced the Ice Lake Xeon architecture as well and put a lot of marketing behind it. And by itself, Ice Lake is a major advancement since it's Intel's first server part that uses their 10 nm process and provides a PCIe Gen4 host interface, and it includes support for 2nd generation 3D XPoint DIMMs (Barlow Pass) and 8 DDR4 memory channels.

Unfortunately, Ice Lake is late to the party in the context of its competition; Intel's benchmark results position Ice Lake as a competitor to AMD Rome which matches Ice Lake's 8-channel/PCIe Gen4-based platform despite being over a year old at this point. For reference:

| | Intel Ice Lake[1] | AMD Rome[2] |
|---|---|---|
| Shipping | 4Q2020 | 3Q2019 |
| Cores | up to 32 | up to 64 |
| Memory | 8x DDR4-3200 | 8x DDR4-3200 |
| Host Interface | ?x PCIe Gen4 | 128x PCIe Gen4 |

By the time Ice Lake starts shipping, AMD will be launching its next-generation Milan server processors, so it's difficult to get excited about Ice Lake if one isn't married to the Intel software ecosystem or doesn't have a specific use for the new AVX512 instructions being introduced.

The Intel software ecosystem is not nothing though, and Intel does seem to remain ahead on that front. Intel had its inaugural oneAPI Dev Summit during SC'20, and although I don't follow the application developer space very closely, my perception of the event is that it focused on showcasing the building community momentum around oneAPI rather than delivering splashy announcements. That said, this oneAPI Dev Summit seems to have sucked the air out of the room for other Intel software-centric events; IXPUG had no discernible presence at SC'20 despite IXPUG changing its name from "Intel Xeon Phi User Group" to "Intel eXtreme Performance User Group" when Xeon Phi was sunset. However, one dev event is better than none; I did not hear of any equivalent events hosted by AMD at SC'20.

NVIDIA also announced a new SKU of its Ampere A100 data center GPU with a whopping 80 GB of HBM2. This was surprising to me since the A100 with 40 GB of HBM2 was only first unveiled two quarters ago. The A100 chip itself is the same so there's no uptick in flops; they just moved to HBM2e stacks which allowed them to double the capacity and get an incremental increase in memory bandwidth.

So, who's this part for? Doubling the HBM capacity won't double the price of the GPU, but the A100-80G part will undoubtedly be more expensive despite there being no additional FLOPS. My guess is that this part was released for:

- People who just want to fit bigger working sets entirely in GPU memory. Larger deep learning models are the first thing that come to my mind.

- People whose applications can't fully utilize A100's flops due to suboptimal memory access patterns; higher HBM2e bandwidth may allow such apps to move a little higher along the roofline.

- People who may want to purchase AMD's next-generation data center GPU (which will undoubtedly also use HBM2e), which will probably be released before the follow-on to Ampere is ready.
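The roofline argument in the second bullet can be made concrete with a few lines of arithmetic. This is only a sketch: the peak and bandwidth figures below are round placeholder numbers I made up for illustration, not NVIDIA's specifications, and `attainable_tflops` is just a name I chose.

```python
def attainable_tflops(peak_tflops, bandwidth_tbs, flops_per_byte):
    """Classic roofline: a kernel is capped by compute or by memory traffic."""
    return min(peak_tflops, bandwidth_tbs * flops_per_byte)


# Placeholder numbers for illustration only (assumptions, not vendor specs).
PEAK_TFLOPS = 10.0  # same chip in both parts, so the compute ceiling is shared
BW_OLD_TBS = 1.5    # assumed bandwidth of the original HBM2 part, in TB/s
BW_NEW_TBS = 2.0    # assumed bandwidth of the HBM2e part, in TB/s

for intensity in (0.5, 2.0, 8.0):  # arithmetic intensity in flops per byte
    old = attainable_tflops(PEAK_TFLOPS, BW_OLD_TBS, intensity)
    new = attainable_tflops(PEAK_TFLOPS, BW_NEW_TBS, intensity)
    print(f"{intensity:4.1f} flop/B: {old:5.2f} -> {new:5.2f} TFLOPS")
```

Under these assumptions, the low-intensity kernels gain in proportion to the bandwidth bump, while the kernel that already sits on the flat part of the roofline gains nothing, which is why the extra bandwidth only matters to a subset of applications.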

NVIDIA also upgraded its Selene supercomputer to include these A100-80G parts, moving its Top500 position to #5 and demonstrating that these parts exist and deliver as advertised.

What's missing

HPE/Cray was pretty quiet on announcements, especially after two SCs in a row with Shasta (now "Cray EX") news. HPE undoubtedly has its head down readying its first large Shasta installations, and given the fact that the primary manufacturing facilities for Cray Shasta are located in a COVID hotspot in the US, maybe this was to be expected--this autumn has not been the time to rush anything.

That said, we know that Cray EX systems have been shipping since July 2020:

A wee video for Friday afternoon. Watch the installation of the four-cabinet Shasta Mountain system, the first phase of the @ARCHER2_HPC 23-cabinet system. https://t.co/DqYRDJi39B @Cray_Inc pic.twitter.com/8D4Hv5Msmt

— EPCCed (@EPCCed) July 31, 2020

So it is a little surprising that HPE was not promoting any early customer or science success stories yet, and the only Cray EX/Shasta system to appear on Top500 was Alps, a modest 4.6 PF Rome-based system at CSCS. Next year--either at the all-virtual ISC'21 or SC'21--will likely be the year of Cray EX.

Intel was also pretty quiet about Aurora, perhaps for the same reason as HPE/Cray. The fact that Intel's biggest hardware news was around Ice Lake suggests that Intel's focus is on fulfilling the promises of disclosures they made at SC'19 rather than paving new roads ahead. There was a healthy amount of broad-stroke painting about exascale, but aside from the oneAPI buzz I mentioned above, I didn't see anything technically substantive.

Sadly, IBM was the most quiet, and perhaps the most prominent appearance of IBM in this year's official program was in winning the Test of Time Award for the Blue Gene/L architecture. It was almost a eulogy of IBM's once-dominant position at the forefront of cutting-edge HPC research and development, and this feeling was underscored by the absence of arguably the most noteworthy IBMer involved in the creation of Blue Gene. This isn't to say IBM had no presence at SC'20 this year; it's just clear that their focus is on being at the forefront of hybrid cloud and cognitive computing rather than supercomputing for supercomputing's sake.

High-level Themes

The most prevalent theme that I kept running into was not the technology on the horizon, but rather the technology further off. There were a few sessions devoted to things like "Post Moore's Law Devices" and "Exotic Technology" in 2035, and rather than being steeped in deep technical insight, they leaned more towards either recitations of similar talks given in years past (one speaker presented slides that were literally five years old) or outlandish claims that hinged on, in my opinion, incomplete views of how technology evolves.

I found the latter talks a bit disturbing to find in the SC program since they contained very little technical insight and seemed more focused on entertainment value--the sort of thing usually relegated to post-conference hotel bar conversation. So rather than repeat their predictions as gospel, I'll present my critical take on them. I realize that it's far easier for me to throw stones at people at the top of the hill than to climb there myself, and I'm perfectly willing to accept that my opinions below are completely wrong. And, if you'd like to throw stones at me yourself, I contributed my position to a panel on tiered storage this year against which all are welcome to argue.

Computing Technologies Futures

This year's focus on far-flung technologies at SC made me wonder--are these sorts of talks filling out the program because there's no clear path beyond exascale? Is it possible that the HPC community's current focus on climbing the exascale mountain is taking our minds off of the possibility that there's nothing past that mountain except desert?

For example, Shekhar Borkar gave his five-year outlook on memory technologies:

Memory Technology Score Card

— HPC Guru (@HPC_Guru) November 18, 2020

According to Shekhar it is SRAM, DRAM, Flash & PCM for the next 5 years.

Other technologies are OK for research but not ready for prime time yet. #SC20 #HPC #AI pic.twitter.com/q7sjCp2DFH

SRAM and DRAM are decades-old staples in the HPC industry, and even NAND has been used in production HPC for a decade now. The statement that PCM may be useful in the next five years is quite striking since PCM products have been shipping in volume since 2017--from this, I take that the future is going to look an awful lot like the present on the memory and storage front. The biggest change, if any, will likely be the economics of NAND and 3D integration evolving to a point where we can afford more all-flash and all-HBM systems in the coming years.

On the computational front, many of the soothsayers leaned heavily on using cryogenics for the post-Moore's Law chip designs. Ultra-low-temperature CMOS and superconductors for supercomputers are low-hanging fruit to pick when conjecturing about the future since (1) their physics are well understood, and (2) they have clear and nonlinear benefits over the CMOS technologies baked into chips today, as shown by Borkar:

The problem, of course, is that you won't ever be able to buy a cryogenic supercomputer unless a company can make enough money selling a cryogenic supercomputer to (1) pay down the non-recurring engineering costs, (2) recoup the costs of productizing the design, and (3) make enough profit to make the shareholders or venture capitalists underwriting (1) and (2) happy.

Realize that cryogenics at scale are dangerous and messy--compared to water cooling, there is no municipal supply of liquid helium, and the market for building pumps and piping for cryogenic plumbing is virtually zero compared to water-based plumbing. When you add the fact that the vast majority of data centers--including the hyperscalers who drive much of the data center market--don't want to touch water-cooled infrastructure, the HPC market would have to bear the cost of cryogenic computing at-scale entirely on its own for the foreseeable future.

That all said, remember that this is just my own personal opinion. For a helpful and mostly objective perspective, @HPC_Guru posted a thread that captures the general sentiment of these sessions.

For the sake of entertainment, I'll include some of the more outlandish slides that I saw on this topic.

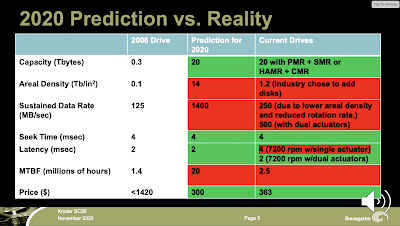

Erik DeBenedictis had the following predictions of the future in 2006 for 2020:

DeBenedictis' primary oversight in this prediction was failing to see the end of Dennard scaling due to physics. Had power consumption continued to drop with node size, we could very well be at 20 GHz today, and the fact that his core counts, flops/socket, and system peak were reasonable is a testament to good forecasting. However, the end of Dennard scaling is what forced CPUs towards longer vectors (which is how a 40-core socket can still get 1.6 TF without running at 20 GHz) and what motivated the development of the more power-efficient architecture of GPGPUs. DeBenedictis' predictions for the future, though, don't look as reasonable to me:

While quantum/classical hybrid machines may very well exist in 2035, they aren't exactly solving the same problems that today's supercomputers can. In a sense, he chose to make a meta-prediction that science will change to fit the technology available--or perhaps he chose to redefine supercomputing to mean something even more niche than it does today.
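To go back to the 2006 forecast for a moment, the trade between clock speed and vector width is simple arithmetic. Here is a minimal sketch: the 40 cores, 1.6 TF, and 20 GHz come from the discussion above, but the flops-per-cycle values are my own assumptions about what a scalar core and a wide-vector core would deliver, and the function names are made up.

```python
def peak_tflops(cores, clock_ghz, flops_per_cycle):
    """Peak rate of one socket: cores x cycles/second x flops/cycle."""
    return cores * clock_ghz * flops_per_cycle / 1000.0  # GFLOPS -> TFLOPS


def clock_needed_ghz(target_tflops, cores, flops_per_cycle):
    """Clock speed required to hit a target peak with a given core design."""
    return target_tflops * 1000.0 / (cores * flops_per_cycle)


CORES = 40           # from the discussion above
TARGET_TFLOPS = 1.6  # from the discussion above

SCALAR_FMA = 2    # assumption: one scalar fused multiply-add per cycle
WIDE_VECTOR = 32  # assumption: two 512-bit FMA units working on 64-bit values

for label, width in (("scalar FMA", SCALAR_FMA), ("wide vectors", WIDE_VECTOR)):
    ghz = clock_needed_ghz(TARGET_TFLOPS, CORES, width)
    print(f"{label:12s}: {ghz:5.2f} GHz for {TARGET_TFLOPS} TF on {CORES} cores")
```

With those assumptions, the scalar design needs the forecasted 20 GHz, while the wide-vector design reaches the same peak at a little over 1 GHz. The flops are the same either way; only the place they come from changed.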

Thomas Sterling also gave his 200 GHz yottaflop prediction:

which hasn't changed since he predicted a superconducting yottaflop at ISC'18. Unlike DeBenedictis, Sterling chose not to redefine HPC to fit the available technology but instead predict a physical, economical, and practical fantasy about the future. Not that there's anything wrong with that. Everyone's got to have a goal.

Kathy Yelick offered the most pragmatic 15-year prediction:

and I can't poke holes in any of these predictions because there is a clear path from today to this vision for the future. That said, if you actually attach flops and hertz to these predictions, the future does not look nearly as exciting as superconducting yottaflops do.

As dissatisfying as it may be, Shekhar Borkar had a slide that is probably the pathway into the future of HPC:

The only way the future of HPC will be predictable is if you're willing to define what HPC is to fit whatever the available technologies are. Yelick expressed the same sentiment with her "Not sure, but it will be called OpenMP" bullet, and to his credit, Sterling himself did this with his Beowulf cluster. If the market just gives you a pile of parts, strap it together and call it HPC. And if transistor scaling has no more steam, find something that still has legs and call it Moore's Law.

Storage Technologies Futures

On the storage front, the predictions from 2006 for 2020 storage technology were pretty reasonable as well. Dr. Mark Kryder (of Kryder's Law fame) predicted that Kryder's Law would hold:

However, he mispredicted how it would hold--his assumption was that surface bit density would keep skyrocketing, and this is why his bandwidth number was so far off. Packing magnetic bits ever more closely together turns out to be a very difficult problem, so the hard disk drive industry chose to increase capacities by solving the easier problem of packing more platters into a single 3.5" half-height form factor.

The flash predictions of Richard Freitas (who passed away in 2016) were also very reasonable:

His biggest miscalculation was not realizing that solid-state storage would bifurcate into the two tiers we now call RAM and flash. He predicted "storage class memory" based on the assumption that it would be block-based (like flash) but use a simple and low-latency bus (like RAM). We enjoy higher bandwidth and capacity than his prediction due to the increased parallelism and lower cost of NAND SSDs, but relying on PCIe instead of a memory bus and the low endurance of NAND (and therefore significant back-end data management and garbage collection) drove up the latency.

Predictions for the future were more outlandish. Kryder's prediction for 2035 was a bit too much for me:

Extrapolating Kryder's Law another 15 years puts us at 1.8 petabytes per hard drive, but this rests on the pretty shaky foundation that there's something holy about hard disk drive technology that will prevent people from pursuing different media. Realizing this requires two things to be true:

- The HDD industry remains as profitable in the next fifteen years as it is today. Seeing as how parts of the HDD industry are already going extinct due to flash (remember when personal computers had hard drives?) and hyperscale is taking more ownership of drive controller functionality and eating into manufacturers' margins, I just don't see this as being likely.

- Developing the required recording techniques (two-dimensional magnetic recording and bit-patterned media) is both as fast and as cheap as developing HAMR was. If it's not, see #1 above--there won't be the money or patience to sustain the HDD market.

This doesn't even consider the appeal of dealing with 1.8 PB drives as a system architect; at Kryder's forecasted numbers, it would take eleven days to fill, rebuild, or scrub one of these drives. As a system designer, why would I want this? Surely there are better ways to assemble spindles, motors, actuators, and sheet metal to increase my bandwidth and reduce my blast radius than cramming all these platters into a 3.5" form factor.
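The eleven-day figure is just capacity divided by bandwidth, and the same two numbers can be turned around to see what per-drive bandwidth they imply. A quick sketch, assuming decimal petabytes and a drive that streams at full speed the whole time (the helper names are mine):

```python
SECONDS_PER_DAY = 86_400


def days_to_fill(capacity_bytes, bandwidth_bytes_per_s):
    """Time to write (or read, or scrub) an entire drive end to end."""
    return capacity_bytes / bandwidth_bytes_per_s / SECONDS_PER_DAY


def implied_bandwidth(capacity_bytes, days):
    """Sustained bandwidth needed to cover the whole drive in `days`."""
    return capacity_bytes / (days * SECONDS_PER_DAY)


capacity = 1.8e15  # 1.8 PB, taking a petabyte as 10^15 bytes (assumption)
bandwidth = implied_bandwidth(capacity, 11)

print(f"implied bandwidth: {bandwidth / 1e9:.2f} GB/s")
print(f"days to fill:      {days_to_fill(capacity, bandwidth):.1f}")
```

So even at roughly 1.9 GB/s per spindle, a single failure means a week and a half of rebuild traffic, which is the blast radius problem in a nutshell.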

My bet (and note--I was not invited to contribute it, as I am not an expert!) is that the HDD market will continue to slow down as it falls off the Kryder Law curve due to scaling limitations. This will result in a slow downward spiral where R&D slows because it is starved of funding, and funding is starved because HDDs fall further and further off of the economics curve. HDDs won't be gone by 2035, but they will fit in the small gap that exists between low-cost write-once-read-many media (like ultra-dense trash flash) and low-cost write-once-read-never media (like tape).

Kryder essentially acknowledged that his projection relies on something intrinsically special about HDDs; he commented that the technological advancements required to reach 1.8 PB HDDs will happen because HDD engineers don't want to lose their jobs to the flash industry. Personally, I'd take a new job with an exciting future over a gold watch any day of the week. Maybe that's the millennial in me.

I found this general theme of wildly projecting into the future rather yucky this SC, and I won't miss it if it's gone for another fifteen years. By their very nature, these panels are exclusive, not inclusive--someone literally has to die in order for a new perspective to be brought on board. There was an element to this in the Top500 BOF as well, and one slide in particular made me cringe at how such a prominent good-ol-boys club was being held up before the entire SC community. These sorts of events are looking increasingly dated and misrepresentative of the HPC community amidst the backdrop of SC putting diversity front and center.

Actual Future Directions

Although wild projections of the future felt like fashionable hot topics of the year, a couple of previous hot topics seemed to be cooling down and transitioning from hype to reality. Two notable trends popped out at me: the long-term relationship between HPC and AI and what disaggregation may really look like.

The Relationship of HPC and AI

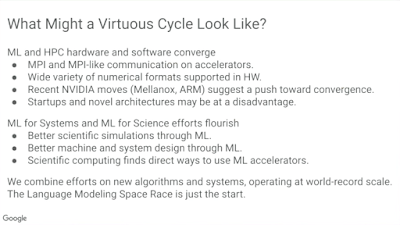

As has been the norm for a few years now, deep learning (now more broadly "AI") was peppered across the SC program this year. Unlike previous years, though, the AI buzz seemed to be tempered by a little more pragmatism as if it were coming down the hype curve. Perhaps the best talk that captured this was an invited talk by Cliff Young of Google about the possibility of a Virtuous Cycle of HPC and AI.

The "convergence of HPC and AI" has been talked about in the supercomputing community since HPC-focused GPUs were reinvented as AI accelerators. If you look at who's been selling this line, though, you may realize that the conversation is almost entirely one-way; the HPC industry pines for this convergence. The AI industry, frankly, doesn't seem to care what the HPC industry does because they're too busy monetizing AI and bankrolling the development of the N+1th generation of techniques and hardware to suit their needs, not those of the HPC industry.

Dr. Young's talk closed this loop by examining what the AI industry can learn from HPC; the so-called "Cambrian explosion" of accelerators is somewhere near its peak which has resulted in a huge architectural design space to explore:

When cast this way, HPC actually has a lot of experience in driving progress in these areas; the 4x4 systolic array design point has its genesis in the HPC-specific MIC architecture, and the HPC industry drove the productization of the DRAM-backed HBM memory hierarchy implemented by IBM for the Summit and Sierra systems. These HPC-led efforts presumably contributed to Google's ability to bet on much larger array sizes starting with its first-generation TPU.

In addition, it sounds like training has begun to reach some fundamental limits of data-parallel scalability:

HPC has long dealt with the scalability limitations of allreduce by developing technologies like complex low- and high-radix fabric topologies and hardware offloading of collective operations. Whether the AI industry simply borrows ideas from HPC and implements its own solutions or contributes to existing standards remains to be seen, but standards-based interfaces into custom interconnects like AWS Elastic Fabric Adapter are a promising sign.
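To see why allreduce becomes a wall for data-parallel training, it helps to write down a cost model. What follows is the textbook latency/bandwidth model for a ring allreduce, not anything shown in the talk, and the latency, bandwidth, and message size are placeholder values I picked for illustration.

```python
def ring_allreduce_seconds(nodes, message_bytes, latency_s, bytes_per_s):
    """Textbook cost of a ring allreduce: a reduce-scatter followed by an
    allgather, each taking (nodes - 1) steps that move 1/nodes of the data."""
    if nodes < 2:
        return 0.0
    steps = 2 * (nodes - 1)
    chunk_bytes = message_bytes / nodes
    return steps * (latency_s + chunk_bytes / bytes_per_s)


# Placeholder values (assumptions): 100 MB of gradients, 5 us per hop, 10 GB/s.
GRADIENT_BYTES = 100e6
LATENCY_S = 5e-6
BANDWIDTH = 10e9

for nodes in (8, 64, 512, 4096):
    t = ring_allreduce_seconds(nodes, GRADIENT_BYTES, LATENCY_S, BANDWIDTH)
    print(f"{nodes:5d} nodes: {t * 1e3:7.2f} ms per allreduce")
```

In this model the bandwidth term levels off at about twice the message size over the link speed, but the latency term keeps growing linearly with node count. That growing term is what fancier topologies and in-network collective offload are meant to attack.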

Another "hard problem" area in which HPC is ahead is in sparse matrices:

Young's position is that, although "sparse" means different things to AI (50-90% sparse) than it does to HPC (>95% sparse), HPC has shown that there are algorithms that can achieve very high fractions of peak performance on sparse datasets.

His concluding slide was uplifting in its suggestion that the HPC-AI relationship may not be strictly one-way forever:

He specifically called out promise in the use of mixed precision; AI already relies on judicious use of higher-precision floating point to stabilize its heavy use of 16-bit arithmetic, and scientific computing is finding algorithms in which 16-bit precision can be tolerated.
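A toy example shows why that judicious use of higher precision matters. This sketch is mine, not from the talk: it emulates half precision using the `e` format of Python's `struct` module and compares summing in a 16-bit accumulator against summing the same 16-bit inputs in a wider one.

```python
import struct


def to_fp16(x):
    """Round a Python float to the nearest IEEE 754 half-precision value."""
    return struct.unpack("<e", struct.pack("<e", x))[0]


def sum_fp16_accumulator(values):
    """Every partial sum is rounded back to 16 bits, as in pure-fp16 math."""
    total = 0.0
    for v in values:
        total = to_fp16(total + to_fp16(v))
    return total


def sum_wide_accumulator(values):
    """Inputs are 16-bit, but the running total is kept in higher precision."""
    total = 0.0
    for v in values:
        total += to_fp16(v)
    return total


values = [0.01] * 10_000  # the exact sum would be 100
narrow = sum_fp16_accumulator(values)
wide = sum_wide_accumulator(values)

print(f"16-bit accumulator: {narrow}")
print(f"wide accumulator:   {wide:.3f}")
```

The 16-bit accumulator stalls far short of 100 because, once the running total gets large enough, each new 0.01 is smaller than half the spacing between representable values and rounds away entirely. Keeping only the accumulator in higher precision recovers nearly the right answer from the same 16-bit inputs.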

Being more hardware- and infrastructure-minded myself, I was particularly surprised to see this nod to liquid cooling early on:

Google's TPU v3 was its first foray into direct liquid cooling, a data center technology that HPC has been using for decades (think: Cray-2's waterfall). While this may not seem spectacular to any PC enthusiast who's done liquid cooling, the difficulty of scaling these systems up to rack-, row-, and data center-scale is not always linear. Young explicitly acknowledged HPC's expertise in dealing with liquid-cooled infrastructure, and if hyperscale is driven in this direction further, HPC will definitely benefit from the advances that will be enabled by a new and massive market driver.

Disaggregation in Practice

The promise of disaggregation--having pools of CPU, persistent memory, GPUs, and flash that you can strap together into a single node--has been around for a long time and has steadily gained attention as a potential candidate for an exascale technology. However, I don't think there was a realistic hope for this until IBM's AC922 node--the one that comprises the Summit and Sierra systems--hit the market and demonstrated a unified, hardware-enabled coherent memory space across CPUs and GPUs.

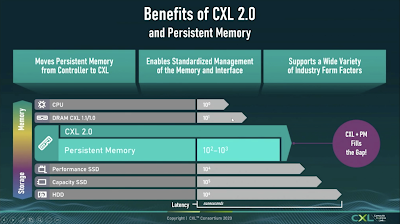

The actual story there wasn't great though; coherence between CPU and GPU was enabled using NVIDIA's proprietary NVLink protocol while the CPU and NIC were connected via a different coherence protocol, OpenCAPI, over the same physical interface. CCIX and GenZ also emerged as high-speed protocols for cache coherence and disaggregation, and the story only got worse when Intel put forth CXL as its standard for coherence and disaggregation.

Fortunately, the dust is now settling and it appears that CXL and GenZ are emerging at the front of the pack. There was an amicable panel session where members of these two consortia presented a unified vision for CXL and GenZ which almost appeared credible: CXL would be the preferred protocol for inside a chassis or rack, and GenZ would be the preferred protocol between chassis and racks. Key features of the finalized CXL 2.0 standard were unveiled which largely revolved around support for CXL switches:

These switches function not only as port expanders to allow many devices to plug into a single host, but also as true switches that enable multi-root complexes that pool hosts and devices to dynamically map devices to hosts using CXL's managed hotplug capability. There's also support for a CXL Fabric Manager that moderates something that looks a lot like SR-IOV; a single physical device can be diced up and mapped to up to sixteen different hosts. At its surface, this looks like a direct, open-standard competitor to NVLink, NVSwitch, and MIG.

What these new CXL switches do not support is inter-switch linking; all CXL devices must share a single switch to maintain the low latency for which CXL was designed. This is where GenZ fits in since it is a true switched fabric, and this is why the CXL and GenZ consortia have signed a memorandum of understanding (MOU) to design their protocols towards mutual compatibility and interoperability so that the future of disaggregated systems will be composed of pooled CXL devices bridged by a GenZ fabric. A direct parallel was drawn to PCIe and Ethernet, where a future disaggregated system may see CXL assume the role of PCIe, and GenZ may assume the role currently filled by Ethernet.
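As a mental model of the pooling described above, here is a deliberately tiny sketch of a single switch that maps shared devices to hosts. Everything in it is hypothetical: `ToySwitch` and its methods are names I invented, it is not a real CXL or fabric manager interface, and the only detail taken from the session is the limit of sixteen hosts per device.

```python
MAX_HOSTS_PER_DEVICE = 16  # the sharing limit mentioned above


class ToySwitch:
    """Hypothetical single-switch device pool; not a real CXL interface."""

    def __init__(self):
        self.bindings = {}  # device name -> set of host names sharing it

    def bind(self, device, host):
        """Map a slice of `device` to `host`, enforcing the sharing limit."""
        hosts = self.bindings.setdefault(device, set())
        if host not in hosts and len(hosts) >= MAX_HOSTS_PER_DEVICE:
            raise RuntimeError(f"{device} is already shared by {len(hosts)} hosts")
        hosts.add(host)

    def unbind(self, device, host):
        """Release a host's slice, as a managed hot-remove would."""
        self.bindings.get(device, set()).discard(host)


switch = ToySwitch()
for i in range(MAX_HOSTS_PER_DEVICE):
    switch.bind("accelerator0", f"host{i}")
print(len(switch.bindings["accelerator0"]), "hosts share accelerator0")
```

Note that there is only one `ToySwitch` here and no notion of linking two of them together, which mirrors the single-switch constraint; in this picture, anything spanning switches would be the job of a separate fabric.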

When it came time for Q&A, the panel got more interesting.

A lot of the audience questions revolved around what standards CXL is planning to wipe off the face of the planet. The Intel (and CXL) panelist, Debendra Das Sharma, fielded the bulk of these questions and made it clear that

(1) CXL will not replace DDR as a local memory interface; it is a complementary technology. This sounded a little disingenuous given the following slide was also shown to highlight CXL 1.0's latency being on par with DRAM latency:

(2) CXL will not replace PCIe as a host I/O interface; it is a superset of PCIe and many devices will remain happy with PCIe's load/store semantics. Of course, this is what I would say too if I had effective control over both the CXL standard and the PCIe SIG.

When asked directly if Intel had joined the GenZ consortium though, Sharma gave a terse "no" followed by "no comment" as to why. He then immediately followed that with a very carefully crafted statement:

"While we have not joined the GenZ consortium, we are fully supportive of making the CXL enhancements that will help GenZ."

The panelists also commented that the MOU was designed to make transitioning from CXL to GenZ protocols smooth, but when asked exactly how the CXL-to-GenZ bridge would be exposed, Tim Symons (representing Microchip and GenZ) could not offer an answer since this bridging function is still being defined. These sorts of answers left me with the impression that CXL is in the driver's seat and GenZ has been allowed to come along for the ride.

Reading between the lines further, there was a striking absence of HPE people on the panel given the fact that GenZ originated within HPE's "The Machine" project. It remains unclear where GenZ fits now that HPE owns Slingshot, a different high-performance scale-out switched fabric technology. What would be the benefit of having a three-tier Slingshot-GenZ-CXL fabric? If CXL 2.0 adopted a single-hop switch and fabric manager, what's to stop CXL 3.0 from expanding its scope to a higher radix or multi-hop switch that can sensibly interface directly with Slingshot?

Given that CXL has already eaten a part of GenZ's lunch by obviating the need for GenZ host interfaces, I wouldn't be surprised if GenZ eventually meets the same fate as The Machine and gets cannibalized for parts that get split between future versions of Slingshot and CXL. CXL has already effectively killed CCIX, and IBM's decision to join CXL suggests that it may be positioning to merge OpenCAPI's differentiators into CXL after Power10. This is pure speculation on my part though.

Spectrum Scale User Group vs. Lustre BOF

Because SC'20 was smeared over two weeks instead of one, I got to attend both the Lustre BOF and one of the Spectrum Scale User Group (SSUG) sessions. I also came equipped with a much more meaningful technical understanding of Spectrum Scale this year (I've spent the last year managing a group responsible for Spectrum Scale at work), and it was quite fascinating to contrast the two events and their communities' respective priorities and interests.

The Spectrum Scale User Group featured a presentation on "What is new in Spectrum Scale 5.1.0" and the Lustre BOF had its analogous Feature Discussion. I broadly bucketize the new features presented at both events into four categories:

1. Enterprisey features that organizations may care about

For Spectrum Scale, this included support for newer releases of RHEL, SLES, Ubuntu, AIX(!), and Windows (!!). IBM also noted that Spectrum Scale now supports the zEDC hardware compression unit on the z15 mainframe processor:

The Lustre discussion presented their equivalent OS support slide with a similar set of supported enterprise Linux distributions (RHEL, SLES, Ubuntu). No support for AIX or Z (s390x) though:

If nothing else, this was a reminder to me that the market for Spectrum Scale is a bit broader than Lustre's, which is essentially just HPC. I have to assume they have enough AIX, Windows, and Z customers to justify their support for those platforms. That said, wacky features like hardware-assisted compression are not unique to Spectrum Scale on Z; Lustre picked up hardware-assisted compression back in 2017 thanks to Intel.

New improvements to Spectrum Scale's security posture were also presented that were a little alarming to me. For example, one no longer has to add scp and echo to the sudoers file for Spectrum Scale to work (yikes!). There was also a very harsh question from the audience to the effect of "why are there suddenly so many security fixes being issued by IBM?" and the answer was similarly frightening; Spectrum Scale is now entering markets with stringent security demands which has increased IBM's internal security audit requirements, and a lot of new vulnerabilities are being discovered because of this.

It's ultimately a good thing that Spectrum Scale is finding and fixing a bunch of security problems, since the prior state of the practice was just not performing stringent audits. I assume that Lustre's approach to security audits is closer to where Spectrum Scale was in years past, and should Lustre ever enter these "new markets" to compete with Spectrum Scale, I expect a similarly uncomfortable quantity of security notices would come to light. This is all speculative though; the only definite is that IBM is moving GPFS towards role-based access control which is a positive direction.

Overall, Spectrum Scale seemed considerably more focused on developing these enterprisey features than Lustre.

2. Manageability features that administrators may care about

Spectrum Scale also revealed a bunch of smaller features that are nice to have for administrators including

- Faster failing of hung RDMA requests - you can now set a maximum time that an RDMA request can hang (e.g., if an endpoint fails) before its thread is killed by Spectrum Scale itself. This avoids having to wait for lower-level timeouts and seems like a nice-to-have knob for a file system that supports a lot of path and endpoint diversity. Lustre may be ahead on this front with its lnet_transaction_timeout parameter, but it's unclear exactly how these two settings differ.

- Safeguards against administrator error - Spectrum Scale added features that warn the administrator about doing something that may be a mistake, such as accidentally breaking quorum by downing a node or mapping incorrect drive slots to RAID groups. There's not really equivalent functionality in Lustre; these are the places where Lustre solution providers (think HPE/Cray ClusterStor) get to value-add management software on top of open-source Lustre (think cscli).

- GUI and REST API changes - you can do an increasing number of management operations using the Spectrum Scale GUI or its underlying control-plane REST API. Lustre has the IML GUI, but it isn't treated as a first-class citizen in the way that Spectrum Scale's GUI is, and it was not mentioned at the Lustre BOF at all. Again, this is an area where vendors usually value-add their own management on top of community Lustre.

- Improved monitoring, reporting, and phone-home - a framework called "MAPS" was recently introduced to essentially do what Nagios does in most DIY environments--raise alarms for crashes, resource exhaustion, misconfiguration, and the like. It also does performance monitoring and historical data aggregation. As with the other manageability features mentioned, Lustre relies on third-party tools for these features.

For resilience, Spectrum Scale announced new tunable parameters to improve parallel journal recovery:

whereas Lustre announced parallel fsck with major performance improvements to speed up recovery:

Finally, Spectrum Scale showcased its vision to allow Spectrum Scale to be mounted inside containerized environments:

This is actually somewhere that Lustre is quite a bit ahead in some regards because it has long had features like UID/GID mapping and subdirectory mounts that allow for a greater degree of isolation that maps well to untrusted containers.

That all said, Lustre's focus is not on taking on more of these nice-to-have manageability features. When asked about adding basic manageability features like supporting easy addition/removal of Lustre OSTs and OSSes to enable evergreen Lustre systems analogous to Spectrum Scale's mmrestripefs command, the answer was effectively "no." The reasons given are that (1) Lustre clients are where files get stitched together, so migration will always have to involve client access, and (2) lfs find and lfs migrate already provide the tools necessary to move data files in theory. From this, I take away that stitching those two lfs commands together into a tool that actually does what mmrestripefs does is an exercise left to the viewer--or a company that can value-add such a tool on top of their Lustre offering.
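To give a feel for what that exercise involves, here is a rough, untested-on-real-hardware sketch of gluing the two commands together. It assumes that `lfs find <dir> --ost <index> --type f` and `lfs migrate -c <count> <file>` behave as described in the Lustre manual; the helper names, the mount point, and the OST index are hypothetical. It deliberately ignores the hard parts that a real mmrestripefs equivalent would have to solve, such as preventing new objects from landing on the OST being drained, handling files that are open elsewhere, and throttling the migration.

```python
import subprocess
from typing import Iterable, List


def find_cmd(mount: str, ost_index: int) -> List[str]:
    """Build the command that lists regular files with objects on one OST."""
    return ["lfs", "find", mount, "--ost", str(ost_index), "--type", "f"]


def migrate_cmd(path: str, stripe_count: int = 1) -> List[str]:
    """Build the command that rewrites one file with a fresh layout."""
    return ["lfs", "migrate", "-c", str(stripe_count), path]


def plan_drain(paths: Iterable[str], stripe_count: int = 1) -> List[List[str]]:
    """Turn a list of file paths into one migrate command per non-empty path."""
    return [migrate_cmd(p, stripe_count) for p in paths if p]


def drain_ost(mount: str, ost_index: int, stripe_count: int = 1,
              dry_run: bool = True) -> List[List[str]]:
    """List files on an OST, then print (dry run) or run each migration."""
    listing = subprocess.run(find_cmd(mount, ost_index), check=True,
                             capture_output=True, text=True)
    plan = plan_drain(listing.stdout.splitlines(), stripe_count)
    for cmd in plan:
        if dry_run:
            print(" ".join(cmd))  # show what would be executed
        else:
            subprocess.run(cmd, check=True)
    return plan


if __name__ == "__main__":
    # Hypothetical mount point and OST index; requires a Lustre client.
    drain_ost("/mnt/lustre", 4, dry_run=True)
```

Even this toy version has to run on a Lustre client and touch every file individually, which is exactly the client-side involvement the Lustre developers pointed to.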

3. Performance, scalability, and reliability features that end users may care about

Spectrum Scale didn't have a huge amount to offer in the user-facing performance/scalability/reliability features this year. They improved their support for QOS (which is admittedly fantastic when compared to Lustre's Token Bucket Filter QOS, which cannot limit IOPS like Spectrum Scale can) from an administrator standpoint, and they have begun to think about how to incorporate TRIMming into flash-based Spectrum Scale deployments to offer reliable performance.

By comparison, Lustre's new features really shine in this department. Andreas Dilger presented this slide near the beginning of his talk:

which reflects significant attention being paid to improving the performance of emerging noncontiguous and otherwise adversarial I/O patterns--perhaps motivated by storage-hungry AI and genomics markets.

Lustre is also introducing features aimed at both scale-up and scale-out, with a 30x speedup in the time it takes to mount petabyte OSTs (likely in preparation for the exascale Lustre installations coming in the next year or two) and automated directory metadata sharding, shrinking, and balancing. From this, it's clear that the primary focus of Lustre continues to be extreme scale and performance above all else, but it's unclear how much of this effort is putting Lustre ahead of Spectrum Scale and how much is simply catching up to all the effort that went into making Spectrum Scale scale out to 250 PB for the Summit system.

4. Interface features that platform developers may care about

The newest release of Spectrum Scale introduces improvements to NFS (by adding v4.1 support), CSI (incremental improvements), SMB (incremental improvements), and most surprising to me, HDFS. By comparison, I don't think Lustre directly supports any of these interfaces--you have to use third-party software to expose these protocols--and if they are supported, they aren't under active development.

Overall Impressions

These two presentations pointed to a sharp contrast between how Spectrum Scale and Lustre position themselves as storage systems; IBM's vision for Spectrum Scale is as a high-capacity data lake tier against which a diversity of apps (HPC, containerized services, map-reduce-style analytics) can consume and produce data. They even said as much while talking about their HDFS support:

Spectrum Scale AFM improvements were also touted at the user group presentation as a means to enable workflows that span on-premise and public cloud for workloads involving HPC, containerized services, file, and object--no matter where you operate, Spectrum Scale will be there. They showed this logo soup diagram which spoke to this:

and it's clearly aligned with IBM's hybrid cloud corporate strategy. I can see how this vision could be useful based on my experience in industry, but at the same time, this looks like a Rube Goldberg machine with a lot of IBM-specific linchpins that concentrates risk on IBM product support (and licensing costs!) progressing predictably.

Lustre, by comparison, appears to be focused squarely on performance and scale. There was no logo soup or architectural vision presented at the Lustre BOF itself. This is likely a deliberate effort by the Lustre community to focus on being an open-source piece of a larger puzzle that can be packaged up by anyone with the need or business acumen to do so. Just as Linux itself is just a community effort around which companies like Red Hat (IBM) or SUSE build and market a solution, Lustre should be just one part of an organization's overall data management strategy whereas Spectrum Scale is trying to be the entire answer.

This isn't a value judgment for or against either; Lustre offers more architectural flexibility at the cost of having to do a lot of day-to-day lifting and large-scale architectural design oneself, while Spectrum Scale is a one-stop shop that likely requires fewer FTEs and less engineering effort to build infrastructure for complex workflows. The tradeoff, of course, is that Spectrum Scale and its surrounding ecosystem are priced for enterprises, and absent a new pricing scheme that economically scales cost with capacity (hypothetically referred to as "data lake pricing" at the SSUG), the choice of whether to buy into Spectrum Scale or Lustre as a part of a larger data strategy may come down to how expensive your FTEs are.

On a non-technical note, the Lustre BOF certainly felt more community-oriented than the Spectrum Scale UG; the dialog was more collegial and there were no undertones of "customers" demanding answers from "vendors." This is not to say that the SSUG wasn't distinctly more friendly than a traditional briefing; it just felt a bit more IBM-controlled since it was on an IBM WebEx whose registration was moderated by IBM and where all the speakers and question answerers were IBM employees. Perhaps there's no other way in a proprietary product since the vendor ultimately holds the keys to the kingdom.

IO-500 BOF

The IO-500 BOF is one of my favorite events at both ISC and SC each year, but as with the rest of SC'20, this year's IO-500 BOF felt like a quiet affair. I noticed two noteworthy themes:

- I/O performance is being awarded in dimensions beyond just peak I/O bandwidth. There are six awards now being given for first place: 10-node bandwidth, 10-node metadata, 10-node overall, total bandwidth, total metadata, and total overall. This contrasts with Top500 which treats performance in a single dimension (peak HPL) and implicitly perpetuates the position that HPL performance is the only aspect of performance that defines "#1." I quite like the IO-500 approach because it makes it easier to see a multidimensional picture of I/O performance and apply your own value system to the list to decide what combination of hardware and storage system software qualifies as #1.

- The importance of system configuration is growing in the IO-500 community--defining a system hardware schema, presenting the data uniformly, and establishing standard tools and techniques for collecting this data from the systems running the IO-500 benchmark are all on the roadmap for the IO-500 benchmark. Again, this makes the list much more valuable for the purposes of learning something since a properly annotated set of submissions would allow you to understand the effects of, for example, choosing NVMe over SAS SSDs or declustered parity over RAID6 on nonvolatile media.
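The idea of applying your own value system to a multidimensional list can be sketched in a few lines. Everything here is hypothetical: the three systems and their scores are made up for illustration and do not come from any IO-500 list, and the weighted geometric mean is just one assumed way of expressing a preference between bandwidth and metadata performance.

```python
# Hypothetical entries; the names and numbers are invented for illustration.
entries = {
    "system-a": {"bw_gib_s": 400.0, "md_kiops": 100.0},
    "system-b": {"bw_gib_s": 100.0, "md_kiops": 900.0},
    "system-c": {"bw_gib_s": 200.0, "md_kiops": 300.0},
}


def weighted_score(entry, bw_weight):
    """Weighted geometric mean of bandwidth and metadata scores.

    bw_weight=1.0 cares only about bandwidth, 0.0 only about metadata,
    and 0.5 reduces to a plain geometric mean of the two.
    """
    md_weight = 1.0 - bw_weight
    return entry["bw_gib_s"] ** bw_weight * entry["md_kiops"] ** md_weight


def rank(entries, bw_weight):
    """Return system names ordered from best to worst for a given weighting."""
    return sorted(entries,
                  key=lambda name: weighted_score(entries[name], bw_weight),
                  reverse=True)


if __name__ == "__main__":
    for w in (1.0, 0.5, 0.0):
        print(f"bw_weight={w}: {rank(entries, w)}")
```

With these made-up numbers, a bandwidth-only view and a metadata-only view crown different systems, which is the point: "#1" depends on what the reader values.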

The final IO-500 list for SC'20 itself didn't change much this time; experimental and proof-of-concept file systems remain dominant in the top 10 positions, and DAOS, WekaFS, and IME carry most of the weight. However, the #1 position was a surprise:

A new file system called "MadFS" took the top spot with some ridiculous performance numbers, and frustratingly, there have been no public disclosures about what this file system is or how it works. The IO-500 committee said that they spoke privately with the submitters and felt comfortable that the entry was legitimate, but they were not at liberty to disclose many details since Pengcheng Laboratory is preparing to present MadFS at another venue. They did hint that MadFS drew inspiration from DAOS, but they didn't offer much more.

Peeling the MadFS submission apart does reveal a few things:

- It is a file system attached to Pengcheng Laboratory's Cloudbrain-II system, which is a Huawei Atlas 900 supercomputer packed with Huawei Kunpeng 920 ARM CPUs and Huawei Ascend 910 coprocessors. Cloudbrain-II is a huge system with a huge budget, so it should have a very capable storage subsystem.

- 72 processes were run on each of the 255 client nodes, reaching a peak of 2,209,496 MiB/second. This translates to 73 Gbit/sec out of each 100 Gb/s node--pretty darned efficient.

- The MadFS file system used is 9.6 PB in size, and the fastest-running tests (ior-easy-*) ran for a little over six minutes. This corresponds to 863 TB read and written in the best case, which is reasonable.

- The ior-easy tests were run using a transfer size of 2,350,400 bytes which is a really weird optimization point. Thus, it's unlikely that MadFS is block-based; it probably runs entirely in DRAM or HBM, is log-structured, and/or relies on persistent memory to buffer byte-granular I/O from any underlying block devices.

- The submission indicates that 254 metadata nodes were used, and each node had six storage devices. The submission also says that data servers (of an undefined quantity) have 2 TB NVMe drives.

- Since 255 clients and 254 metadata servers were used, this may suggest that metadata is federated out to the client nodes. This would explain why the metadata rates are so astonishing.

- If the 9.6 PB of NVMe for data was located entirely on the 255 clients, this means each compute node would've had to have had over 37 TB of NVMe after parity. This seems unlikely.

- From this, we might guess that MadFS stores metadata locally but data remotely. This would be a very fragile architecture for important data, but a reasonable one for ephemeral storage akin to UnifyFS.

- MadFS is not ready for prime time, as its statfs(2) returns nonsense data. For example, the MadFS ior-easy-* runs report the file system has zero inodes, while the ior-hard-* runs reported 268 trillion inodes all of which are used.
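The back-of-the-envelope numbers in the list above can be reproduced with a few lines of arithmetic. This sketch uses only figures quoted above and assumes decimal units for PB, TB, and Gbit (and binary MiB for the reported bandwidth); the variable names are illustrative.

```python
MIB = 2**20  # bytes per MiB (binary)

peak_mib_per_s = 2_209_496   # peak bandwidth quoted above
client_nodes = 255           # client nodes quoted above
fs_capacity_bytes = 9.6e15   # 9.6 PB, assuming decimal petabytes
data_moved_bytes = 863e12    # 863 TB, assuming decimal terabytes

peak_bytes_per_s = peak_mib_per_s * MIB

# Per-client share of the peak bandwidth, in decimal Gbit/s
gbit_per_node = peak_bytes_per_s * 8 / client_nodes / 1e9

# How long the file system must sustain peak bandwidth to move 863 TB
seconds_at_peak = data_moved_bytes / peak_bytes_per_s

# Capacity each client would need if all data lived on the clients
tb_per_client = fs_capacity_bytes / client_nodes / 1e12

print(f"{gbit_per_node:.1f} Gbit/s per client node")
print(f"{seconds_at_peak:.0f} s ({seconds_at_peak / 60:.1f} min) at peak")
print(f"{tb_per_client:.1f} TB per client node")
```

The results line up with the figures above: roughly 73 Gbit/s per node, a little over six minutes at peak, and more than 37 TB per client if the data were stored client-side.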

Until more disclosures are made about MadFS and the Cloudbrain-II system though, there's little intellectual value in this IO-500 submission. However, the waters are definitely chummed, and I for one will be keeping an eye out for news about this Chinese system.

Finally, although not part of the IO-500 BOF, Microsoft Azure released some benchmark results shortly afterward showing their successful demonstration of over 1 TB/sec using BeeGFS in Azure. This wasn't run to the IO-500 spec so it wouldn't have been a valid submission, but it is the single fastest IOR run in the cloud of which I am aware. This bodes well for the future of parallel file systems in the cloud as a blessed BeeGFS/Azure configuration would compete directly with Amazon FSx for Lustre.

Concluding Thoughts

Virtual SC this year turned out to be far more exhausting than I had anticipated despite the fact that I never had to leave my chair. On the upside, I got to attend SC with my cat for the first time:

and I didn't find myself getting as sweaty running between sessions. On the downside, the whole conference was just weird. The only conference buzz I felt was through the Twitter community due to the total lack of chance encounters, late nights out, early morning briefings, and copious free coffee. The content felt solid though, and I admit that I made heavy use of pause, rewind, and 2x replay to watch things that I would have otherwise missed in-person.

In my past SC recaps I remarked that I get the most out of attending the expo and accosting engineers on the floor, and the complete absence of that made SC feel a lot less whole. As a speaker, the lack of engagement with the audience was very challenging too. The 45-second delay between live video and Q&A made dialog challenging, and there was no way to follow up on questions or comments using the virtual platform. I suppose that is the price to be paid for having an otherwise robust virtual event platform.

Although COVID forced us all into a sub-optimal SC venue this year, I think it also took away a lot of advancements, discussions, and dialog that would've fed a richer SC experience as well. With any luck SC can be in-person again next year and the community will have bounced back and made up for the time lost this year. When SC'21 rolls around, we should have at least one exascale system hitting the floor in the US (and perhaps another in China) to talk about, and the Aurora system should be very well defined. We'll have a few monster all-flash file systems on the I/O front to boot (including one in which I had a hand!), and the world will be opening up again--both in the technological sense and the literal sense. The future looks bright.

As always, I owe my sincerest thanks to the organizers of SC this year for putting together the programs that spurred this internal monologue and the dialogues in which I engaged online these past two weeks. I didn't name every person from whom I drew insight, but if you recognize a comment that you made and would like attribution, please do let me know.

Finally, if you'd like to read more, see my recaps of the PDSW'20 workshop and my tiered storage panel.