PDSW'20 Recap

This year was the first all-virtual Parallel Data Systems Workshop, and despite the challenging constraints imposed by the pandemic, it was remarkably engaging. The program itself was contracted relative to past years and only had time for three Work-In-Progress (WIP) presentations, so it was a little difficult to pluck out high-level research trends and themes. However, this year's program did seem more pragmatic, with talks covering very practical topics that had a clear connection to production storage and I/O. The program also focused heavily on the HPC side of the community, and the keynote address was perhaps the only talk that focused squarely on the data-intensive data analysis side of what used to be PDSW-DISCS. Whether this is the result of PDSW's return to the short paper format this year, shifting priorities from funding agencies, or some knock-on effect of the pandemic is impossible to say.

Although there weren't any strong themes that jumped out at me, last year's theme of using AI to optimize I/O performance was much more muted this year. Eliakin del Rosario presented a paper describing a clustering and visual analysis tool he developed that underpins a study applying machine learning to develop an I/O performance model presented in the main SC technical program, but there was no work in the direction of applying AI to directly optimize I/O. Does this mean that these ideas have climbed over the hype curve and are now being distilled down into useful techniques that may appear in production technologies in the coming years? Or was the promise of AI to accelerate I/O just a flash in the pan?

In the absence of common themes to frame my recap, what follows are just my notes and thoughts about some of the talks and presentations that left an impression. I wasn't able to attend the WIP session or cocktail hour due to non-SC work obligations (it's harder to signal to coworkers that you're "on travel to a conference" when you're stuck at home just like any other workday) so I undoubtedly missed things, but all slides and papers are available on the PDSW website, and anyone with an SC workshop pass can re-watch the recorded proceedings on the SC20 digital platform.

Keynote - Nitin Agrawal

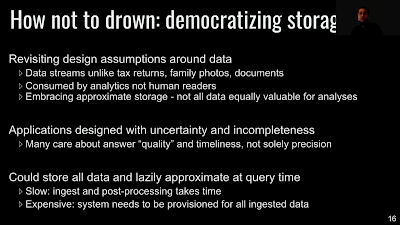

This year’s keynote by Nitin Agrawal was a long-form research presentation on SummaryStore, an “approximate storage system” that doesn't store the data you put in it so much as it stores the data you will probably want to get back out of it at a later date. This notion of a storage system that doesn't actually store things sounds like an affront at a glance, but when contextualized properly, it makes quite a lot of sense:

There are cases where the data being stored doesn't have high value. For example, data may become less valuable as it ages, or data may only be used to produce very rough guesses (e.g., garbage out) so inputting rough data (garbage in) is acceptable. In these cases, the data may not be worth the cost of the media on which it is being stored, or its access latency may be more important than its precision; these are the cases where an approximate storage system may make sense.

The specific case presented by Dr. Agrawal, SummaryStore, strongly resembled a time series database to feed a recommendation engine that naturally weighs recent data more heavily than older data. The high-level concept seemed a lot like existing time series telemetry storage systems where high-frequency time series data are successively aggregated as they age so that new data may be sampled every few seconds while old data may be sampled once an hour.

For example, LMT and mmperfmon are time series data collection tools for measuring the load on Lustre and Spectrum Scale file systems, respectively. The most common questions I ask of these tools are things like

- What was the sum of all write bytes between January 2018 and January 2019?

- How many IOPS was the file system serving between 5:05 and 5:10 this morning?

Because these approximate storage systems are specifically designed with an anticipated set of queries in mind, much of Agrawal's presentation spoke to the specific challenges he faced while implementing SummaryStore, such as how it augments Bloom filter buckets with additional metadata so that approximations of sub-bucket ranges can be calculated. More of the specifics can be found in the presentation slides and references therein.
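
To make the "aggregate as it ages" idea concrete, here is a minimal sketch of a time-decayed series that keeps recent samples in fine buckets, folds older ones into coarse buckets, and answers range-sum queries by assuming values are spread evenly within each bucket. This is not SummaryStore's actual design; the class name, bucket widths, and the uniform-spread estimate are all assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass
class Bucket:
    start: float  # inclusive start time of the data summarized here
    end: float    # exclusive end time of the data summarized here
    total: float  # sum of all values in the bucket
    count: int    # number of samples in the bucket


class DecayedSeries:
    """Toy store: fine buckets for recent data, coarse buckets for old data.

    Hypothetical illustration only; assumes `coarse` is a multiple of `fine`.
    """

    def __init__(self, fine=10, coarse=3600, horizon=600):
        self.fine, self.coarse, self.horizon = fine, coarse, horizon
        self.fine_buckets = {}    # fine bucket start -> Bucket
        self.coarse_buckets = {}  # coarse window start -> Bucket

    def append(self, t, value):
        start = t - (t % self.fine)
        b = self.fine_buckets.setdefault(
            start, Bucket(start, start + self.fine, 0.0, 0))
        b.total += value
        b.count += 1

    def compact(self, now):
        """Fold fine buckets older than the horizon into coarse buckets."""
        cutoff = now - self.horizon
        for start in sorted(self.fine_buckets):
            b = self.fine_buckets[start]
            if b.end > cutoff:
                continue
            del self.fine_buckets[start]
            cstart = start - (start % self.coarse)
            c = self.coarse_buckets.get(cstart)
            if c is None:
                self.coarse_buckets[cstart] = Bucket(
                    b.start, b.end, b.total, b.count)
            else:
                # Grow the coarse bucket to cover the span it now summarizes.
                c.start = min(c.start, b.start)
                c.end = max(c.end, b.end)
                c.total += b.total
                c.count += b.count

    def approx_sum(self, t0, t1):
        """Estimate the sum over [t0, t1), assuming even spread per bucket."""
        result = 0.0
        buckets = list(self.fine_buckets.values())
        buckets += list(self.coarse_buckets.values())
        for b in buckets:
            overlap = min(t1, b.end) - max(t0, b.start)
            if overlap > 0:
                result += b.total * overlap / (b.end - b.start)
        return result
```

With this layout, a query over a whole year touches only coarse buckets, while a query over the last five minutes is answered from fine buckets. A query that cuts through the middle of an old coarse bucket is only as good as the even-spread assumption, which is where bursty workloads would make the estimate drift.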

This notion of approximate storage is not new; it is preceded by years of research into semantic file systems, where the way you store data is driven by the way in which you intend to access the data. By definition, these are data management systems that are tailor-made for specific, high-duty cycle I/O workloads such as web service backends.

What I took away from this presentation is that semantic file systems (and approximate storage systems by extension) aren't intrinsically difficult to build for these specific workloads. Rather, making such a system sufficiently generic in practice to be useful beyond the scope of such a narrow workload is where the real challenge lies. Tying this back to the world of HPC, it's hard to see where an approximate storage system could be useful in most HPC facilities since their typical workloads are so diverse. However, two thoughts did occur to me:

- If the latency and capacity characteristics of an approximate storage system are so much better than generic file-based I/O when implemented on the same storage hardware (DRAM and flash drives), an approximate storage system could help solve problems that traditionally were limited by memory capacity. DNA sequence pattern matching (think BLAST) or de novo assembly could feasibly be boosted by an approximate index.

- Since approximate storage systems are purpose-built for specific workloads, the only way they fit into a general-purpose HPC environment is using purpose-built composable data services. Projects like Mochi or BespoKV provide the building blocks to craft and instantiate such purpose-built storage systems, and software-defined storage orchestration in the spirit of DataWarp or the Cambridge Data Accelerator would be needed to spin up an approximate storage service in conjunction with an application that would use it.

I'm a big believer in the second idea, but the first would require a forcing function coming from the science community to justify the effort of adapting an application to use approximate storage.

Keeping It Real: Why HPC Data Services Don't Achieve I/O Microbenchmark Performance

Phil Carns (Argonne) presented a lovely paper full of practical gotchas and realities surrounding the idea of establishing a roofline performance model for I/O. The goal is simple: measure the performance of each component in an I/O subsystem's data path (application, file system client, network, file system server, storage media), identify the bottleneck, and see how close you can get to hitting the theoretical maximum of that bottleneck. A few of the gotchas that stood out to me:

- There's a 40% performance difference between the standard OSU MPI bandwidth benchmark and what happens when you make the send buffer too large to fit into cache. It turns out that actually writing data over the network from DRAM (as a real application would) is demonstrably slower than writing data from a tiny cacheable memory buffer.

- Binding MPI processes to cores is good for MPI latency but can be bad for I/O bandwidth. Highly localized process placement is great if those processes talk to each other, but if they have to talk to something off-chip (like network adapters), the more spread out they are, the greater the path diversity and aggregate bandwidth they may have to get out of the chip.

- O_DIRECT bypasses page cache but not device cache, while O_SYNC does not bypass page cache but flushes both page and device caches. This causes O_DIRECT, when used by itself, to reduce performance for smaller I/Os that would benefit from write-back caching, but to increase performance when used with O_SYNC since one less cache (the page cache) has to be synchronized on each write. Confusing and wild. And also not fully portable: O_SYNC is defined by POSIX, but O_DIRECT is not, and its semantics vary across operating systems and file systems.
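
For anyone who wants to poke at the O_DIRECT and O_SYNC interaction themselves, the following is a rough sketch of how the four flag combinations could be timed on Linux. It is not the benchmark from the paper; the function name, block size, and write count are my own assumptions, and the numbers it produces will depend heavily on the file system and device underneath.

```python
import mmap
import os
import time

# O_DIRECT is not available on every platform; fall back to 0 (no flag).
O_DIRECT = getattr(os, "O_DIRECT", 0)

# Hypothetical labels for the four combinations discussed above.
FLAG_COMBOS = {
    "buffered": 0,
    "O_DIRECT": O_DIRECT,
    "O_SYNC": os.O_SYNC,
    "O_DIRECT|O_SYNC": O_DIRECT | os.O_SYNC,
}


def timed_writes(path, extra_flags=0, block_size=4096, count=256):
    """Write `count` blocks to `path` and return the elapsed seconds.

    Returns None if the file system rejects the flags (some file systems
    do not support O_DIRECT). Short writes are not retried in this sketch.
    """
    # Anonymous mmap regions are page-aligned, which satisfies the buffer
    # alignment that O_DIRECT typically requires on Linux.
    buf = mmap.mmap(-1, block_size)
    buf.write(b"x" * block_size)
    flags = os.O_WRONLY | os.O_CREAT | os.O_TRUNC | extra_flags
    try:
        fd = os.open(path, flags, 0o600)
    except OSError:
        buf.close()
        return None
    try:
        start = time.perf_counter()
        for _ in range(count):
            os.write(fd, buf)
        return time.perf_counter() - start
    except OSError:
        return None
    finally:
        os.close(fd)
        buf.close()
```

Looping over `FLAG_COMBOS.items()` and calling `timed_writes(path, flags)` for each gives a quick side-by-side comparison. Pointing `path` at the storage you actually care about matters, since a laptop SSD and a parallel file system client can behave very differently here.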

Towards On-Demand I/O Forwarding in HPC Platforms

This FORGE service is ephemeral in that it is spun up at the same time your MPI application is spun up and persists for the duration of the job. However, unlike traditional MPI-IO-based collectives, it runs on dedicated nodes, and it relies on a priori knowledge of the application's I/O pattern to decide what sorts of I/O reordering would benefit the application.

- Have a very IOPS-heavy, many-file workload? Since these tend to be CPU-limited, it would make sense to allocate a lot of FORGE nodes to this job so that you have a lot of extra CPU capacity to receive these small transactions, aggregate them, and drive them out to the file system.

- Have a bandwidth-heavy shared-file workload? Driving bandwidth doesn't require a lot of FORGE nodes, and fewer nodes means fewer potential lock conflicts when accessing the shared file.
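
The two cases above amount to a sizing rule of thumb, which could be sketched roughly as follows. To be clear, this is not FORGE's allocation policy; the function name, the inputs, and the 64 KiB small-I/O threshold are all hypothetical and exist only to illustrate the reasoning.

```python
def suggest_forwarding_nodes(num_files, num_ops, total_bytes, max_nodes,
                             small_io_bytes=64 * 1024):
    """Toy heuristic for how many forwarding nodes a job might want.

    Hypothetical illustration: the threshold and the fallback rule are
    assumptions, not anything taken from the FORGE paper.
    """
    if num_ops == 0 or max_nodes < 1:
        return 0
    avg_io = total_bytes / num_ops
    if num_files > 1 and avg_io < small_io_bytes:
        # IOPS-heavy, many-file: extra CPU helps absorb and aggregate
        # lots of small transactions.
        return max_nodes
    if num_files == 1:
        # Shared-file streaming: fewer forwarders means fewer potential
        # lock conflicts on the shared file.
        return 1
    # Everything else: split the difference.
    return max(1, max_nodes // 2)
```

For example, a job issuing a million 4 KiB writes across ten thousand files would be given every available forwarding node, while a job streaming large writes into a single shared file would be given one.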

Fractional-Overlap Declustered Parity

NVIDIA GPUDirect Storage Support in HDF5

In the above diagram, "SEC2" refers to the default POSIX interface, "DIRECT" is POSIX using O_DIRECT, and "GDS" is GPUDirect Storage. What surprised me here is that all of the performance benefits were expressed in terms of bandwidth, not latency--I naively would have guessed that not having to bounce through host DRAM would enable much higher IOPS. These results made me internalize that the performance benefits of GDS lie in not having to gum up the limited bandwidth between the host CPU and host DRAM. Instead, I/O can enjoy the bandwidth of HBM or GDDR to the extent that the NVMe buffers can serve and absorb data. I would hazard that in the case of IOPS, the amount of control-plane traffic that has to be moderated by the host CPU undercuts the fast data-plane path enabled by GDS. This is consistent with literature from DDN and VAST about their performance boosts from GDS.

Fingerprinting the Checker Policies of Parallel File Systems

The practical outcome of this work is that it identified a couple of data structures and corruption patterns that are particularly fragile on Lustre and BeeGFS. Alarmingly, two cases triggered kernel panics in lfsck, which led me to ask: why isn't simple fault injection like this part of the regular regression testing performed on Lustre? As someone who's been adjacent to several major parallel file system outages that resulted from fsck not doing a good job, I see hardening the recovery process as a worthwhile investment, since anyone who's having to fsck in the first place is already having a bad day.