Understanding random read performance along the RAIDZ data path

Although I've known a lot of the parameters and features surrounding ZFS since its relatively early days, I never really understood why ZFS had the quirks that it had. ZFS is coming to the forefront of HPC these days, though (for example, the first exabyte file system will use ZFS), so a few years ago I spent two days at the OpenZFS Developer Summit in San Francisco learning how ZFS works under the hood.

Two of the biggest mysteries to me at the time were:

- What exactly does a "variable stripe size" mean in the context of a RAID volume?

- Why does ZFS have famously poor random read performance?

It turns out that the answers to these are interrelated, and what follows are notes that I took in 2018 as I was working through this. I hope it's all accurate and of value to some budding storage architect out there.

If this stuff is interesting to you, I strongly recommend getting involved with the OpenZFS community. It's remarkably open, welcoming, and inclusive.

The ZFS RAIDZ Write Penalty

Writing Data

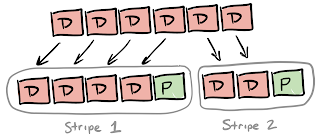

When you issue a write operation to a file system on a RAIDZ volume, the size of that write determines the size of the file system block. That block is divided into sectors whose size is fixed and governed by the physical device sector size (e.g., 512b or 4K). Parity is calculated across sectors, and the data sectors + parity sectors are what get written down as a stripe. In a RAIDZ group with D data drives and P parity drives, each stripe holds up to D data sectors plus P parity sectors. If the number of data sectors in the block is not an even multiple of D, you wind up with a final stripe that has P parity sectors but fewer than D data sectors. For example, if you have a 4+1 but write down six sectors, you get two stripes (one with four data sectors, one with two) that together are composed of six data sectors and two parity sectors:

Figure: ZFS RAIDZ variable stripe for a six-sector block
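The stripe arithmetic above can be sketched in a few lines. This is a simplified model, not OpenZFS code: the function names are hypothetical, and it deliberately ignores one detail of real RAIDZ, which also rounds each allocation up to a multiple of P+1 sectors.

```python
def sectors_for_write(write_bytes: int, sector_bytes: int) -> int:
    """Round a write up to whole device sectors (ceiling division)."""
    return (write_bytes + sector_bytes - 1) // sector_bytes


def raidz_layout(n_data_sectors: int, d: int, p: int) -> list[tuple[int, int]]:
    """Split a block into RAIDZ stripes, returned as (data, parity) sector counts.

    Every stripe carries P parity sectors; only the last stripe may hold fewer
    than D data sectors. Simplification: the P+1 allocation padding that real
    RAIDZ applies is ignored here.
    """
    stripes = []
    remaining = n_data_sectors
    while remaining > 0:
        data = min(d, remaining)
        stripes.append((data, p))
        remaining -= data
    return stripes


# A 24 KiB write on 4K-sector drives, landing on a RAIDZ 4+1.
n = sectors_for_write(24 * 1024, 4096)
layout = raidz_layout(n, d=4, p=1)
print(n, layout)  # 6 [(4, 1), (2, 1)]
```

Summing the tuples gives the six data sectors and two parity sectors from the example.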

These definitions of stripes, blocks, and sectors are mostly standardized in ZFS parlance, and I will try my best to use them consistently in the following discussion. Whether a block is composed of stripes, or a block is a stripe (or perhaps a block is just composed of the data sectors of stripes?) remains a little unclear to me. It also doesn't help that ZFS has a notion of records, and the recordsize parameter determines the maximum size of blocks. Maybe someone can help completely disentangle these terms for me.

Rewriting Data

The ZFS read-modify-write penalty only happens when you try to modify part of a block. Because the block is the smallest unit of copy-on-write, modifying part of a block means you must read, modify, and rewrite all of the block's data sectors. The way this works looks something like:

Figure: Read-modify-write in RAIDZ

where

- The data sectors of the whole block are read into memory, and its checksum is verified. The parity sectors are NOT read or verified at this point since (1) the data integrity was just checked via the block's checksum and (2) parity has to be recalculated on the modified block's data sectors anyway.

- The block is modified in memory, and a new checksum and new parity sectors are calculated.

- The entire block (data and parity) is written to newly allocated space across drives, and the block's new location and checksum are written out to the parent indirect block.
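The three steps above can be modeled as a toy function. This is a sketch under several assumptions: single parity (P=1) computed as a byte-wise XOR, SHA-256 standing in for the block checksum (ZFS's default is fletcher4), and a hypothetical `read_modify_write` name that does not correspond to any OpenZFS function. Allocation and the actual device writes are left to the caller.

```python
import hashlib
from functools import reduce


def xor_parity(stripe: list[bytes]) -> bytes:
    """Single-parity sketch: byte-wise XOR across one stripe's data sectors."""
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*stripe))


def read_modify_write(data_sectors, checksum, offset, patch, d):
    """Hypothetical model of a sub-block overwrite on RAIDZ with P=1."""
    sector_size = len(data_sectors[0])
    # Step 1: read every data sector of the block (parity is not read) and verify.
    block = bytearray(b"".join(data_sectors))
    if hashlib.sha256(block).digest() != checksum:
        raise IOError("block checksum mismatch")
    # Step 2: modify in memory, then recompute the checksum and per-stripe parity.
    assert offset + len(patch) <= len(block), "patch must stay inside the block"
    block[offset:offset + len(patch)] = patch
    new_checksum = hashlib.sha256(block).digest()
    new_sectors = [bytes(block[i:i + sector_size])
                   for i in range(0, len(block), sector_size)]
    new_parity = [xor_parity(new_sectors[i:i + d])
                  for i in range(0, len(new_sectors), d)]
    # Step 3: the caller writes new_sectors + new_parity to freshly allocated
    # space and records new_checksum in the parent indirect block.
    return new_sectors, new_parity, new_checksum


# Six tiny 8-byte "sectors" on a 4+1; overwrite just the second one.
sectors = [bytes([i]) * 8 for i in range(6)]
old_sum = hashlib.sha256(b"".join(sectors)).digest()
new, parity, new_sum = read_modify_write(sectors, old_sum, 8, b"\xff" * 8, d=4)
print(len(new), len(parity))  # 6 2
```

Even though only one sector changed, all six data sectors had to be read and both parity sectors rewritten.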

This read-modify-write penalty only happens when modifying part of an existing block; the first time you write a block, it is always a full-stripe write.

This read-modify-write penalty is why IOPS on ZFS are awful if you do sub-block modifications: every such write op is limited by the slowest device in the RAIDZ group, since you first have to read the whole block, which spans the drives in the group (so you can copy-on-write it). This is different from traditional RAID, where you only need to read the data chunk(s) you're modifying and the parity chunk(s), not the full stripe, since you aren't required to copy-on-write the full stripe.
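A toy latency model makes the "slowest device" argument concrete. It assumes one operation at a time with no queueing or caching, a fixed layout (drives 0 to 3 hold data, drive 4 holds parity), and made-up millisecond values chosen only to illustrate the effect of one slow drive; the function names are hypothetical.

```python
def raidz_subblock_write_ms(drive_ms: list[float]) -> float:
    """RAIDZ: read the whole block, then write a new full-width copy.

    The read touches every data drive and the write touches every drive,
    so the operation waits on the slowest one.
    """
    return max(drive_ms)


def raid_subblock_write_ms(drive_ms: list[float], touched: list[int]) -> float:
    """Traditional RAID: only the modified chunk(s) and parity are read and rewritten."""
    return max(drive_ms[i] for i in touched)


# Hypothetical 4+1 group where drive 3 is slow.
latency = [5.0, 5.0, 5.0, 20.0, 5.0]
raidz = raidz_subblock_write_ms(latency)
raid = raid_subblock_write_ms(latency, touched=[0, 4])  # modify the chunk on drive 0
print(f"RAIDZ: {1000 / raidz:.0f} IOPS, traditional RAID: {1000 / raid:.0f} IOPS")
```

In this model the single slow drive caps RAIDZ at 50 IOPS, while traditional RAID avoids it entirely whenever the modified chunk and its parity live elsewhere.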

Implications of RAIDZ on Performance and Design

This has some interesting implications for the way you design a RAIDZ system:

- The write pattern of your application dictates the layout of your data across drives, so your read performance is partly a function of how your data was written. This contrasts with traditional RAID, where your read performance is not affected by how your data was originally written since it's all laid out in fixed-width stripes.

- You can get higher IOPS in RAIDZ by using narrower stripe widths. For example, a RAIDZ 4+2 would deliver higher overall IOPS than a RAIDZ 8+2, since a 4+2 has half as many data drives and is therefore less likely to include a slow drive. This contrasts with traditional RAID, where a sub-stripe write doesn't need to read all 4 or 8 data chunks to modify just one of them.
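The width argument can be put into rough numbers. This sketch assumes each drive is independently "slow" with probability q (q = 0.05 is an arbitrary illustration), a hypothetical 60-drive pool, and, following the slowest-device reasoning above, that each RAIDZ group delivers roughly one drive's worth of random IOPS (200 here, also made up). None of these figures are measurements.

```python
def p_slow(width: int, q: float) -> float:
    """Chance that at least one of `width` drives is slow, each independently with probability q."""
    return 1 - (1 - q) ** width


def pool_iops(total_drives: int, d: int, p: int, drive_iops: float) -> float:
    """Toy model: each RAIDZ group delivers roughly one drive's worth of random IOPS."""
    groups = total_drives // (d + p)
    return groups * drive_iops


for d, p in [(4, 2), (8, 2)]:
    print(f"{d}+{p}: P(slow drive in group)={p_slow(d + p, 0.05):.3f}, "
          f"pool IOPS={pool_iops(60, d, p, 200):.0f}")
```

Under these assumptions, narrower groups win twice: each group is less likely to contain a slow drive, and the same number of drives yields more groups (ten 4+2 groups versus six 8+2 groups).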

How DRAID changes things

An entirely new RAID scheme, DRAID, has been developed for ZFS which upends a lot of what I described above. Rather than using variable-width stripes to optimize write performance, DRAID always issues full-stripe writes regardless of the I/O size being issued by an application. In the example above, when writing six sectors' worth of data to a 4+1, DRAID would write down:

Figure: Fixed-width stripes with skip sectors as implemented by ZFS DRAID

where skip sectors (denoted by X boxes in the above figure) are used to pad out the partially populated stripe. As you can imagine, this can waste a lot of capacity. Unlike traditional RAID, DRAID still relies on ZFS copy-on-write, so you cannot fill DRAID's skip sectors after the block has been written. Any attempt to append to a half-populated block will result in a copy-on-write of the whole block to a new location.
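The capacity cost of fixed-width stripes can be compared against RAIDZ's variable-width stripes with a small sketch. The function names are hypothetical, and the RAIDZ side again ignores RAIDZ's own P+1 allocation padding, so treat the numbers as an approximation of the layouts described above rather than exact on-disk usage.

```python
def ceil_div(a: int, b: int) -> int:
    return -(-a // b)


def draid_layout(n_data_sectors: int, d: int, p: int) -> dict[str, int]:
    """Fixed-width D+P stripes; the last stripe is padded with skip sectors."""
    stripes = ceil_div(n_data_sectors, d)
    return {
        "data": n_data_sectors,
        "skip": stripes * d - n_data_sectors,
        "parity": stripes * p,
        "total": stripes * (d + p),
    }


def raidz_total(n_data_sectors: int, d: int, p: int) -> int:
    """Variable-width RAIDZ stripes (ignoring RAIDZ's P+1 padding)."""
    return n_data_sectors + ceil_div(n_data_sectors, d) * p


for n in (6, 1):
    dr = draid_layout(n, d=4, p=1)
    rz = raidz_total(n, d=4, p=1)
    print(f"{n} data sectors: DRAID uses {dr['total']} ({dr['skip']} skip), "
          f"RAIDZ uses {rz}; DRAID efficiency {n / dr['total']:.0%}")
```

The six-sector example costs ten sectors on DRAID versus eight on RAIDZ, and a single-sector write is the worst case: three of its five sectors are skip sectors.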

Because we're still doing copy-on-write of whole blocks, the write IOPS of DRAID are still limited by the speed of the slowest drive. In this sense, it is no better than RAIDZ for random write performance. However, DRAID does do something clever to avoid the worst case, in which a single sector is being written. In our example of DRAID 4+1, instead of wasting a lot of space by writing three skip sectors to pad that one data sector out to a full stripe, DRAID doesn't bother storing this as 4+1; instead, it redirects this write to a different section of the media that stores data as mirrored blocks (a mirrored metaslab), and the data gets stored as two copies of the sector on two different drives.
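The placement decision described above can be sketched as a simple policy. This is not OpenZFS's allocator: `choose_allocation` is a hypothetical name, and the default of two mirror copies is an assumption taken from the "mirrored pairs" in the 4+1 example.

```python
def choose_allocation(n_data_sectors: int, d: int, p: int,
                      mirror_copies: int = 2) -> tuple[str, int]:
    """Return (placement, sectors consumed) for a write under a hypothetical policy.

    Single-sector writes go to a mirrored metaslab; everything else is padded
    out to full D+P stripes with skip sectors.
    """
    if n_data_sectors == 1:
        return "mirror", mirror_copies
    stripes = -(-n_data_sectors // d)  # ceiling division
    return "draid", stripes * (d + p)


print(choose_allocation(1, d=4, p=1))  # ('mirror', 2)
print(choose_allocation(6, d=4, p=1))  # ('draid', 10)
```

For the single-sector case the mirror consumes two sectors instead of the five a padded 4+1 stripe would.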

This also means that the achievable IOPS for single-sector read operations on data that was written as single-sector writes is really good since all that data will be living as mirrored pairs rather than 4+1 stripes. And since the data is stored as mirrored sectors, either sector can be used to serve the data, and the random read performance is governed by the speed of the fastest drive over which the data is mirrored. Again though, this IOPS-optimal path is only used when data is being written a single sector at a time, or the data being read was written in this way.
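The read-side difference can be captured in the same toy latency model used for writes: one operation at a time, made-up millisecond values, and a hypothetical function name. A mirrored sector can be served by whichever copy responds first, while a striped block has to be read in full (as in the read-modify-write path, so its checksum can be verified), which makes it wait on its slowest data drive.

```python
def block_read_ms(placement: str, drive_ms: list[float]) -> float:
    """Latency to serve a small random read (toy model, one op at a time)."""
    if placement == "mirror":
        # Either copy holds the whole block, so the faster drive can serve it.
        return min(drive_ms)
    # A striped block must be read in full to verify its checksum,
    # so the read waits on the slowest data drive.
    return max(drive_ms)


print(block_read_ms("mirror", [5.0, 20.0]))          # 5.0
print(block_read_ms("draid", [5.0, 5.0, 5.0, 20.0]))  # 20.0
```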